The Power of Caching Training

The Power of Caching – Training Recap

On Thursday, April 3, 2025, the development team at S3Corp. gathered to explore and discuss the topic of caching under the internal knowledge-sharing series. The session, titled “The Power of Caching,” provided a deep dive into how caching works, why it matters in software performance, and how developers can apply various caching strategies efficiently.

03 Apr 2025

This training event aligned with the company-wide value of continuous learning and shared technical growth. S3Corp. encourages regular internal sessions to ensure engineers stay current with essential development practices and are empowered to apply them effectively in real-world projects.

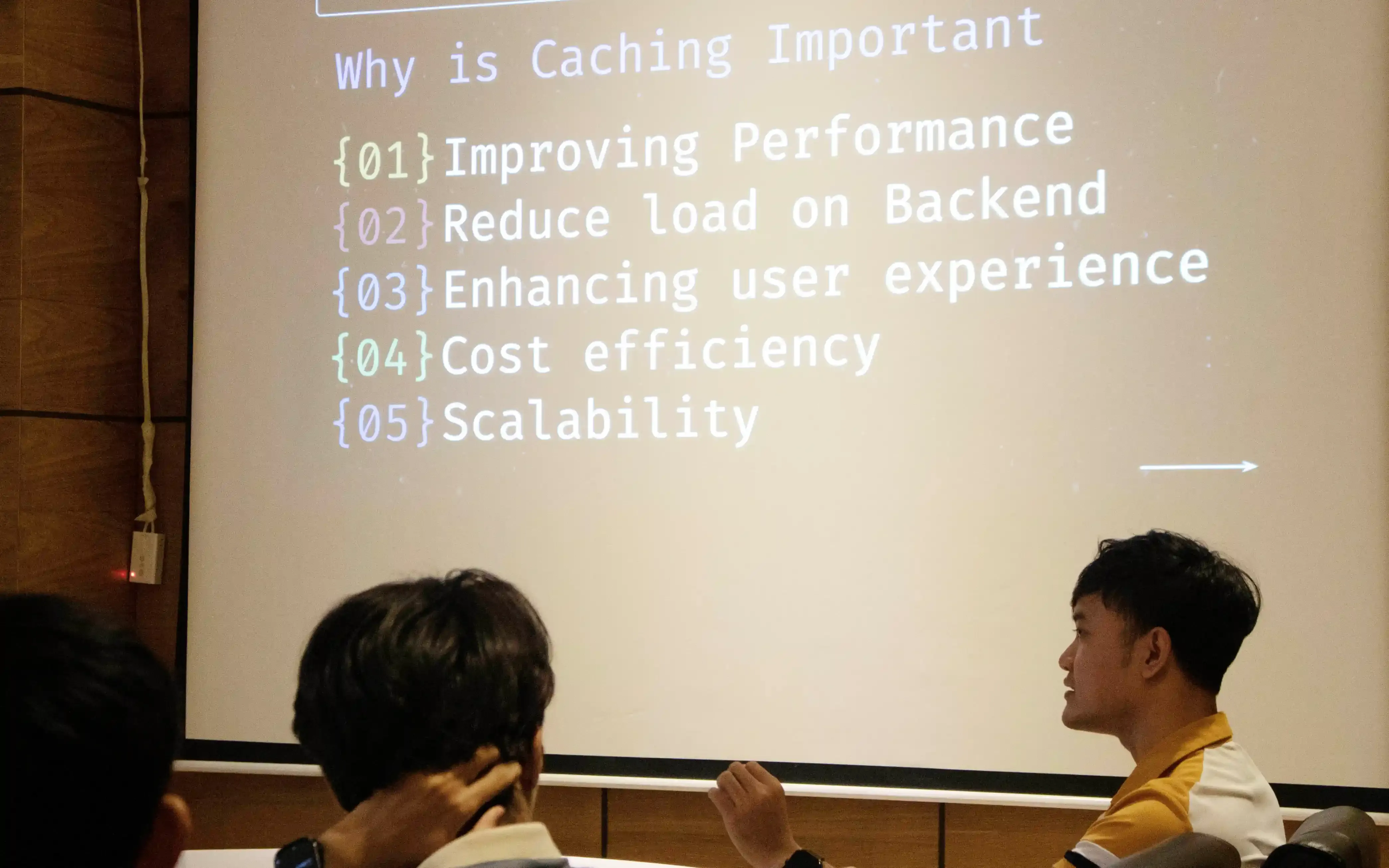

Understanding Caching: What It Is and Why It Matters

The session began with a clear explanation of caching. Caching is a technique used in computing to store copies of data in temporary storage, or cache, so future requests can be served faster. By avoiding repeated calculations or database calls, caching reduces response time and improves user experience.
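As a minimal illustration of avoiding repeated calculations, the Go sketch below memoizes a function: the first call computes and stores the result, and later calls with the same input are answered from a map. `slowSquare` is a hypothetical placeholder for any expensive computation, and the sketch assumes single-goroutine use.

```go
package main

// slowSquare is a hypothetical stand-in for an expensive computation.
func slowSquare(n int) int {
	return n * n
}

// memo remembers results by input so repeated calls skip the computation.
// This sketch is not safe for concurrent use.
type memo struct {
	cache    map[int]int
	computes int // how many times the expensive path actually ran
}

func (m *memo) Square(n int) int {
	if v, ok := m.cache[n]; ok {
		return v // served from the cache
	}
	m.computes++
	v := slowSquare(n)
	m.cache[n] = v // store for future requests
	return v
}
```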

The speaker highlighted the main reasons why caching is widely used. First, it significantly improves performance by reducing latency and computational load. Second, it helps systems scale better by reducing the need for redundant processing. Third, it contributes to cost efficiency, lowering hardware resource use while also improving user-facing performance.

Several use cases were shared to show how caching is applied across different environments. These included database caching to reduce read load, web content caching for faster page delivery, API response caching to limit backend traffic, and CDN caching to bring content closer to users geographically. The presentation also covered common types of caching such as memory caching, disk caching, distributed caching, and application-level caching.

Caching Strategies: Read and Write Patterns

The core of the training focused on the various strategies developers can use when implementing caching. Caching strategies depend on system requirements and expected behavior under load.

Read strategies included the basic cache-aside pattern, in which the application first checks the cache, queries the database only if the data is not found, and then stores the result in the cache for subsequent requests. This approach offers flexibility but places the responsibility on the application to manage cache hits and misses.

Another read pattern discussed was read-through caching, where the cache acts as a transparent layer between the application and the data source. If the requested data is missing from the cache, it automatically fetches it from the original source and stores it for future requests.
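For contrast, a read-through cache moves the loading logic into the cache itself. In this sketch the caller supplies a loader function once, at construction time, and afterwards only ever calls `Get`; `LoaderFunc` and `ReadThroughCache` are illustrative names, not a specific library's API.

```go
package main

import "sync"

// LoaderFunc fetches a value from the original data source on a cache miss.
// The name and signature are assumptions for this sketch.
type LoaderFunc func(key string) (string, error)

// ReadThroughCache hides the data source behind the cache: callers only
// call Get, and the cache loads missing entries itself.
type ReadThroughCache struct {
	mu     sync.Mutex
	data   map[string]string
	loader LoaderFunc
}

func NewReadThroughCache(loader LoaderFunc) *ReadThroughCache {
	return &ReadThroughCache{data: make(map[string]string), loader: loader}
}

func (c *ReadThroughCache) Get(key string) (string, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if v, ok := c.data[key]; ok {
		return v, nil
	}
	// Miss: the cache (not the caller) fetches from the source and stores it.
	v, err := c.loader(key)
	if err != nil {
		return "", err
	}
	c.data[key] = v
	return v, nil
}
```

Holding the mutex while the loader runs keeps the sketch short and prevents duplicate loads of the same key, but it also serializes all misses; a production cache would usually lock per key instead.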

Write strategies included write-through caching, where each write goes to both the cache and the data source as part of the same operation. This keeps the two consistent but can slow down write operations. Another approach was write-behind caching, where the application writes to the cache immediately and the data source is updated asynchronously later. This improves write performance but introduces a risk of data loss if the cache fails before the pending writes are persisted.
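The two write strategies can be compared side by side. In this Go sketch, `store` is a hypothetical stand-in for the data source; `writeThrough.Set` updates it synchronously, while `writeBehind.Set` only marks the key as dirty and leaves persistence to a later `Flush` call, which in practice might run on a timer.

```go
package main

import "sync"

// store is a hypothetical backing data source (e.g. a database table).
type store struct {
	mu   sync.Mutex
	rows map[string]string
}

func (s *store) Save(key, value string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.rows[key] = value
}

// writeThrough updates the store and the cache in the same call, so a
// completed write is already persisted, at the cost of slower writes.
type writeThrough struct {
	mu    sync.Mutex
	cache map[string]string
	db    *store
}

func (w *writeThrough) Set(key, value string) {
	w.mu.Lock()
	defer w.mu.Unlock()
	w.db.Save(key, value) // synchronous write to the data source
	w.cache[key] = value
}

// writeBehind updates only the cache on Set and records the key as dirty.
// Anything not yet flushed is lost if the process crashes.
type writeBehind struct {
	mu    sync.Mutex
	cache map[string]string
	dirty map[string]bool
	db    *store
}

func (w *writeBehind) Set(key, value string) {
	w.mu.Lock()
	defer w.mu.Unlock()
	w.cache[key] = value
	w.dirty[key] = true // persisted later, not now
}

// Flush copies dirty entries to the store; typically run periodically.
func (w *writeBehind) Flush() {
	w.mu.Lock()
	defer w.mu.Unlock()
	for key := range w.dirty {
		w.db.Save(key, w.cache[key])
		delete(w.dirty, key)
	}
}
```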

The session explained that choosing the right strategy involves a trade-off between consistency, latency, complexity, and failure tolerance. Developers were encouraged to evaluate each use case before applying a strategy.

Challenges When Using Caching

While caching offers performance benefits, the training emphasized that it introduces technical challenges that need proper handling.

One major concern is cache invalidation — deciding when to remove or refresh stale data from the cache. Without a clear invalidation policy, applications risk serving outdated data to users. Various methods, such as time-to-live (TTL), manual eviction, or cache versioning, were mentioned as solutions depending on the situation.
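Two of the invalidation methods mentioned, manual eviction and cache versioning, can be sketched in a few lines of Go. Here the version number is embedded in every storage key (for example `products:v0:42`), so bumping a namespace's version makes all of its old entries unreachable at once. The type and key format are assumptions for illustration; TTL-based expiration is not shown here.

```go
package main

import (
	"fmt"
	"sync"
)

// versionedCache supports manual eviction (Delete) and namespace-wide
// invalidation by version bump (BumpVersion).
type versionedCache struct {
	mu       sync.Mutex
	data     map[string]string
	versions map[string]int // namespace -> current version
}

func newVersionedCache() *versionedCache {
	return &versionedCache{data: map[string]string{}, versions: map[string]int{}}
}

// key builds a storage key such as "products:v0:42". Callers hold c.mu.
func (c *versionedCache) key(ns, id string) string {
	return fmt.Sprintf("%s:v%d:%s", ns, c.versions[ns], id)
}

func (c *versionedCache) Set(ns, id, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[c.key(ns, id)] = value
}

func (c *versionedCache) Get(ns, id string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	v, ok := c.data[c.key(ns, id)]
	return v, ok
}

// Delete evicts a single entry manually.
func (c *versionedCache) Delete(ns, id string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.data, c.key(ns, id))
}

// BumpVersion invalidates every entry in a namespace at once. Old entries
// stay in memory until removed by TTL or a replacement policy.
func (c *versionedCache) BumpVersion(ns string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.versions[ns]++
}
```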

Another challenge is cache consistency. In distributed systems, keeping multiple caches synchronized is complex. If not managed properly, this may lead to inconsistent data views across different application nodes.

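Another risk is a cache stampede: when a popular entry is missing or has just expired, many concurrent requests miss at the same time and all query the backend at once, creating a sudden spike in load. Developers can address this using locking mechanisms or request coalescing techniques, so that only one request loads the data while the others wait for its result.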

Memory limitations were discussed as a technical constraint. Caches live in finite memory, and inefficient cache management may cause useful data to be evicted prematurely. Replacement policies such as LRU (Least Recently Used) or LFU (Least Frequently Used) decide which entries to evict when the cache is full, helping keep the most valuable data in memory.
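An LRU policy is commonly built from a hash map plus a doubly linked list ordered by recency. The sketch below uses Go's `container/list`; it assumes a capacity of at least 1 and is not safe for concurrent use without an external mutex.

```go
package main

import "container/list"

// entry is the payload stored in each list element.
type entry struct {
	key   string
	value string
}

// LRUCache evicts the least recently used entry once capacity is reached.
type LRUCache struct {
	capacity int                      // must be >= 1
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> element in order
}

func NewLRUCache(capacity int) *LRUCache {
	return &LRUCache{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

func (c *LRUCache) Get(key string) (string, bool) {
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	c.order.MoveToFront(el) // mark as recently used
	return el.Value.(*entry).value, true
}

func (c *LRUCache) Put(key, value string) {
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	if c.order.Len() >= c.capacity {
		// Evict the least recently used entry from the back of the list.
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
}
```

With a capacity of 2, putting `a` and `b`, reading `a`, and then putting `c` evicts `b`, because `a` was used more recently.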

Golang Demo and Development Best Practices

To put the concepts into practice, a demo was delivered using Golang, focusing on implementing a simple caching mechanism. The presenter showed how to build a basic in-memory cache using Go’s native data structures.

The live coding session covered how to implement TTL-based expiration, store key-value pairs, handle concurrency using mutexes, and design reusable caching functions. Emphasis was placed on writing clean and readable code that can be adapted across projects.

The team explored how caching fits into service-oriented architectures and microservices. Best practices were shared around cache observability, such as tracking hit/miss rates and integrating monitoring tools to evaluate cache performance.
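Tracking hit and miss rates can be as simple as two atomic counters around each lookup. This sketch uses `atomic.Int64` (Go 1.19+); how the counters are exported to a monitoring tool is left out, and the `instrumentedCache` name is hypothetical.

```go
package main

import (
	"sync"
	"sync/atomic"
)

// instrumentedCache wraps a map-based cache with hit/miss counters that a
// metrics exporter could read.
type instrumentedCache struct {
	mu     sync.RWMutex
	data   map[string]string
	hits   atomic.Int64
	misses atomic.Int64
}

func (c *instrumentedCache) Get(key string) (string, bool) {
	c.mu.RLock()
	v, ok := c.data[key]
	c.mu.RUnlock()
	if ok {
		c.hits.Add(1)
	} else {
		c.misses.Add(1)
	}
	return v, ok
}

func (c *instrumentedCache) Set(key, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

// HitRate returns hits / (hits + misses), or 0 before any lookups.
func (c *instrumentedCache) HitRate() float64 {
	h, m := c.hits.Load(), c.misses.Load()
	if h+m == 0 {
		return 0
	}
	return float64(h) / float64(h+m)
}
```

Three hits and one miss give a hit rate of 0.75; a persistently low rate is a signal that a cache may not be earning its memory.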

Suggestions were given on when not to cache, such as dynamic data with short lifespans or sensitive data that must always come from a secure source. Logging and metrics were encouraged to analyze caching effectiveness and make informed decisions about its use in production.

Continuous Learning At S3Corp.

S3Corp. invests in internal training to build a strong technical culture and promote shared learning. Each session reinforces practical knowledge that supports better engineering decisions and project outcomes. The Power of Caching session reflected this goal clearly, equipping engineers with both the theory and hands-on application of a fundamental performance tool.

Training like this not only helps teams deliver more responsive systems but also builds a habit of thoughtful architecture decisions. Regular sharing sessions are a part of S3Corp.’s engineering rhythm, supporting a culture where learning never stops and everyone contributes to team growth.
